Bryce Dixon

Game Designer and Programmer Specializing in

UX and System Design

Gameplay and Editor Programming


I started teaching myself programming with online Java tutorials when I was 8 years old. In high school, I moved over to C, later graduating to C++ during my time at DigiPen Institute of Technology. I've been working with teams of 2-9 developers for the past 5 years and have shipped 4 group projects.

I have proficiency in these technologies:

I am also familiar with these technologies:

Summer 2020-Winter 2021

I was employed as a programmer on the animation runtime team at 343 Industries, working on Halo Infinite. I was responsible for implementing fixes for animation systems and communicating directly with the animation staff to find appropriate resolutions to technical problems. Due to an NDA, I am currently unable to disclose many specifics about my contributions, but this may be updated at a later time.

Spring 2020

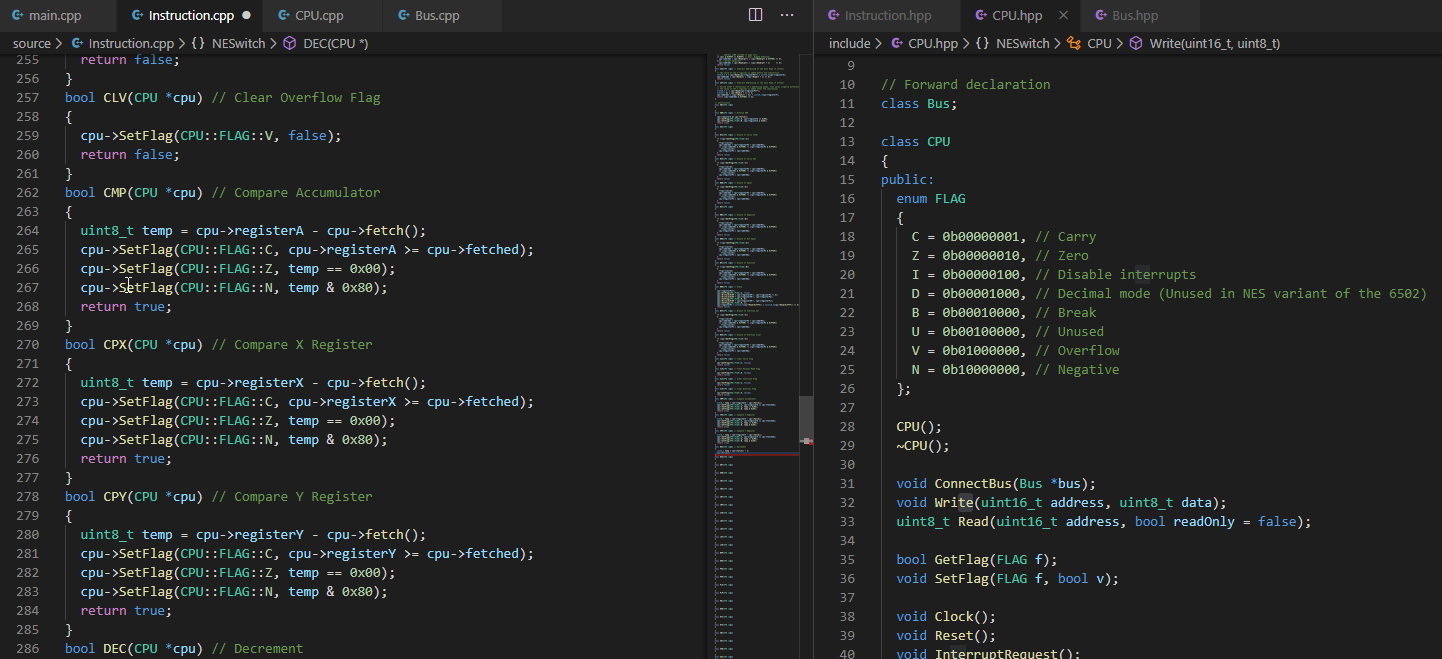

Team size: 1

My final academic project was an NES emulator for Windows with the target demographic of modern-day NES developers. Key features include multiple memory views (with live editing), clock-cycle accurate emulation, and the ability to place breakpoints; all intended to improve turnaround time for testing and debugging.

Fall 2019

Team size: 2

TBA

Summer 2019

A 2D, class-based, object-oriented game engine written in C++ on top of SDL2. It currently supports Windows builds through MinGW and features graphics wrappers, built-in player controllers, music and sound effect playback, input management, event management and handling, game state management and creation, physics, and collision detection/resolution.

Source

Fall 2018 - Spring 2019

Team size: 6

TBA

Spring 2018

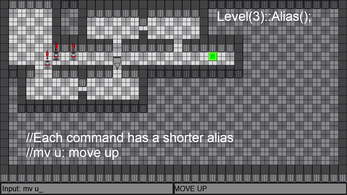

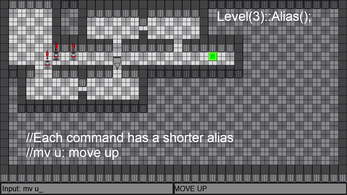

Created for Ludum Dare 41 with the theme "Combine 2 Incompatible Genres." I decided to create a mix of real-time stealth/puzzle games and more methodical programming/shell simulators.

Download

Fall 2017 - Spring 2018

Team size: 9

A simple 2D platformer with an origami-inspired art style and puzzles highlighting a transformation mechanic.

Download

Spring 2017

Team size: 1

This was a project I made for my 2D game design class at DigiPen. The game is multi-device and cross-platform (Windows and Android for the controllers, Windows is required for the server) and involves up to four players cooperatively defeating every enemy in each level by playing cards from their own customized deck.

Spring 2017

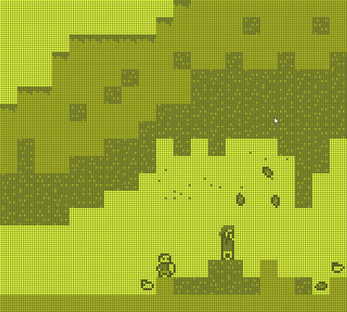

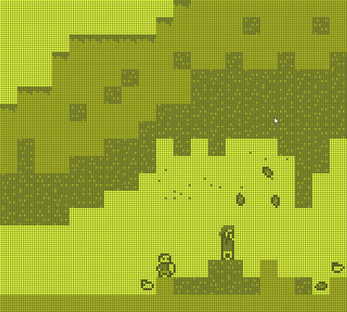

Created for Ludum Dare 38 with the theme "It's a Small World." Pocket World is a Game Boy-inspired sandbox "walking simulator" that keeps all of the original system's limitations: 4 colors at a resolution of 160x144. Many parts of the environment are destructible and drop items that can be used to "craft" more chunks of the world to explore. Characters give the player quests that trade common items for rarer ones, with the eventual goal being a completed map.

I created the game's dialogue system from scratch to support a custom, simplistic scripting style to allow for rapid prototyping, iteration, and the potential of light modding. More information is on the download page.

Summer 2016 - Fall 2017

A C/C++ homebrew library for the Nintendo 3DS. Originally created as a wrapper for the SF2D library, it expanded to gain support for animations, cameras, collision, text, and more. Support was dropped after SF2D became deprecated.

Source

Spring 2016

This game was made for the LowRezJam. The challenge was to make a game at a resolution of 64 by 64 pixels, but I took it a step further and went with only 32 by 32 pixels. At times, it was a struggle - no actually, most of the time it was a struggle.

Inspired by Conway's Game of Life, this gamifies the concept by creating different types of cells that perform unique tasks. Harvesters grant points, Farmers make food, Defenders protect your colony, and Enemies can invade at later stages.

Spring 2014

My first game jam entry, created for Ludum Dare 31 with the theme "Entire Game on One Screen." I decided to interpret "One Screen" as the player's monitor, so the camera is controlled manually by dragging the window around your screen.

Download

Fall 2019

Team size: 1

Solo narrative project following Jacob, a college freshman who suffers from anxiety and PTSD after an armed robbery in his early childhood. Players experience his first four days of college as his undiagnosed and untreated mental illnesses worsen due to his newfound isolation. The story concludes with Jacob reaching out to his friend, Cat, who supports him in seeking treatment.



In many C-inspired languages (I will be focusing on C++), the existence of null pointers implies that all pointers must be validated, but most languages do not offer a standard way of ensuring validation has taken place. This can easily lead to either redundant or insufficient pointer validation, and the problem extends to any variable with potential constraints (eg: an int with a strictly valid range).

Take the following example code snippet:

```cpp
#include <iostream>

void f3(int* x)
{
    if (x == nullptr) // Redundant when called from f2
    {
        return;
    }
    std::cout << *x << '\n';
}

void f2(int* x)
{
    if (x == nullptr) // Redundant when called from f1
    {
        return;
    }
    f3(x);
}

void f1(int* x)
{
    if (x == nullptr)
    {
        return;
    }
    *x += 5;
    f2(x);
}
```

It's clear that, when called from f1, f2 and f3 revalidating their input parameter is redundant and wastes time at runtime. However, the opposite problem, insufficient validation, is just as easy to run into. Imagine we had the following function signature from a precompiled library (we can't see the definition): bool f(int* x);

Are we supposed to do the pointer validation or will the function do it? If the supposed internal pointer validation fails, will the function throw an exception or just return false? These things might be answerable by the documentation, but now we'd be relying on an external document which may or may not be accurate to our specific version of the library.

The proposed type, not_null, is at its core a simple template wrapper for raw pointers (Full Source Code; run it on Compiler Explorer):

```cpp
#include <stdexcept>
#include <type_traits>

// Tag used to skip the null check when the caller has already validated the pointer
struct prevalidated_not_null_t {};

inline constexpr prevalidated_not_null_t prevalidated_not_null{};

template <typename T>
concept mutable_pointer = std::conjunction_v<
    std::is_pointer<T>,
    std::negation<std::is_const<std::remove_pointer_t<T>>>
>;

// Primary template; the specialization below handles pointers to mutable data
template <typename T>
class not_null;

template <mutable_pointer T>
class not_null<T>
{
public:
    using pointer_type = std::remove_cv_t<T>;
    using data_type = std::remove_pointer_t<pointer_type>;
    using ref_type = std::add_lvalue_reference_t<data_type>;

    constexpr not_null(const pointer_type& x) : m_x(x)
    {
        // Single point of validation
        if (!bool(m_x))
        {
            throw std::invalid_argument{ "not_null cannot be constructed from nullptr" };
        }
    }

    constexpr not_null(pointer_type&& x) : m_x(x)
    {
        // Single point of validation
        if (!bool(m_x))
        {
            throw std::invalid_argument{ "not_null cannot be constructed from nullptr" };
        }
    }

    // For external validation. Similar to std::optional::operator*() vs std::optional::value()
    constexpr not_null(pointer_type x, prevalidated_not_null_t) : m_x(x) {}

    constexpr not_null(const not_null& other) : m_x(other.m_x) {}

    constexpr not_null(not_null&& other) = delete; // Moving is forbidden

    // Validates by constructing a temporary not_null
    constexpr not_null& operator=(pointer_type x)
    { m_x = not_null{ x }.m_x; return *this; }

    constexpr not_null& operator=(const not_null& other)
    { m_x = other.m_x; return *this; }

    constexpr not_null& operator=(not_null&& other) = delete; // Moving is forbidden

    [[nodiscard]] constexpr operator pointer_type() const { return m_x; }

    [[nodiscard]] constexpr ref_type operator*() const { return *m_x; }

    [[nodiscard]] constexpr pointer_type operator->() const { return m_x; }

private:
    pointer_type m_x;
};
```

Using it is simple and makes it clear that a function expects a non-null pointer from its signature alone:

```cpp
#include <iostream>
#include <stdexcept>

// Assumes the not_null definition above is in scope

void f_potentially_null(int* p) // This should perform its own validation
{
    if (p == nullptr)
    {
        return;
    }
    std::cout << *p << '\n';
}

void f_not_null(not_null<int*> p) // This will not perform validation
{
    std::cout << *p << '\n';
}

int main()
{
    // not_null<int*> p0;            // Won't compile: there is no default constructor
    // not_null<int*> p0{ nullptr }; // Compiles, but throws std::invalid_argument

    int i{ 5 };

    // This works, but potentially checks `pi != nullptr` many times down the callstack
    int* const pi{ &i };
    f_potentially_null(pi);
    f_potentially_null(nullptr); // Will do nothing (potentially silently)

    // This creates a clear boundary across scopes that the pointer has been validated
    not_null<int*> p_nn{ &i };
    f_not_null(p_nn);

    try
    {
        f_not_null(nullptr); // Will throw
    }
    catch (const std::invalid_argument&)
    {}

    // Avoids using exceptions through external non-nullptr prevalidation
    if (pi != nullptr)
    {
        f_not_null(not_null<int*>{ pi, prevalidated_not_null });
    }
}
```

This can also be expanded for pointers to const data and smart pointers like std::shared_ptr and std::unique_ptr (see the full source code for those implementations).

Using the language's static typing in this way allows us to better communicate to other developers (and our future selves) the expectations and requirements of each function and, in doing so, to explicitly determine who is responsible for validating a given variable.

It was pointed out that, at least for pointer function arguments, some of these issues are already solved by references, which is true. void f(int&); cannot be passed a null pointer and therefore does not need to perform any validation. However, there are two cases that come to mind for which references cannot be used to the same effect:

Placement new:

```cpp
#include <new>

struct S { int* i; };

S f(int* i)
{
    // i points to memory that hasn't been initialized yet, so there's no int to take a reference to
    S s{ new(i) int{} };
    // Now we can use (*s.i)
    return s; // the caller will retain ownership of i, now pointing to initialized data
}
```

This is admittedly a very specific version of a common practice in custom memory pools and linear allocators. For example, we could have the function void allocate(T*); which will construct a variable of type T for us at the given pointer, but if we were to pass it a null pointer of an arbitrary type (technically, passing a literal nullptr can be handled by explicitly overloading for std::nullptr_t), there's no guarantee it will be checked before it's used. By instead making the function void allocate(not_null<T*>); we can ensure the address being initialized will be valid.

The other example is much more common in my experience. Say we want Characters in a game to be able to reference their Location in the world, but that Location can change (think chunks in a game like Minecraft). We can't just give our Character class a Location reference, since a reference can't be reseated, but we also don't want a raw pointer because it could be null (and we want it to always be valid). Instead, we can use not_null as a wrapper that guarantees validity without any "just in case" checks.

```cpp
struct Location;

struct Character
{
    not_null<Location*> location;
};
```

By adhering to this pattern, we assure ourselves of a few things:

Character::location will always be valid.

A Location must exist before a Character can be created.

For a Location's lifetime to end, all Characters referencing it must also have their lifetimes end, without having them strictly be owned by the Location.

Before continuing, understand that this is not intended to be a crash course covering all of networking. Before reading this article, you should already understand IP, DNS, how to use a text console/shell (eg: Bash), and anything you'd need to open/forward ports for a server to listen for and respond to connections.

If any of those caused your brain to play the sound of a 56k modem dialing in to a server, I'd highly recommend looking into the basics of networking and server management first. Try to host your own website using NGINX on a bare installation of Linux (I'd recommend Ubuntu for its simplicity), then come back.

All games (and programs, for that matter) can be broken up into various systems that work together to create a cohesive experience. Those systems are usually fairly comprehensive and for many projects can even be totally owned by a single person. Some examples of systems and their responsibilities in a game include:

You may notice that the description for each of those systems is longer and usually more abstract or project-specific than the last. Admittedly, this is because I'm intentionally manipulating my descriptions to cause that phenomenon, but I do so to highlight an interesting quirk about these systems and how they relate to game development as a whole:

My key point being: "networking" is a multifaceted field of development requiring a constant awareness of every level of every step of every message being sent or received. This isn't in an attempt to scare you out of trying to create a networked game, but to instill an expectation that at some point things will not go to plan, sometimes by fault of something you cannot control, and you'll have to find a mitigation or alternative solution.

These are two terms you should become familiar with before moving forward. In short, scaling "vertically" means "use a bigger machine" while scaling "horizontally" means "use more machines." Most problems are best solved by one of these approaches rather than both. For example:

The term "shard" originally comes from Ultima Online. A more generic term would be "channel," but I tend to stick with "shard" since it's been adopted by many existing MMOs and holds some MMO-specific connotations.

In short, each "shard" is an identical copy of the game server software running simultaneously in a server hardware cluster. This brings a massive horizontal scalability benefit: if each shard can only handle 20 active connections, we can simply add more servers to run more shards to increase our maximum player count. In a proper setup, this could even be done automatically by dynamically opening and closing new shard servers as the total player count fluctuates.

To answer this, I'll propose a hypothetical situation: I'm running 20 shard servers across the world, so what IP address do clients connect to? As things stand, a client has no way of knowing.

An easy solution is to create one static "master" server that clients connect to in order to receive the list of available shards. Each client can then choose a shard to connect to. Alternatively, the master server can provide a specific shard to connect to rather than a list of all available shards, which puts load balancing under our control, but that won't allow players to choose who they're playing with, which could lead to a worse gameplay experience.

Note: DNS allows the master server to use a dynamic IP address; by "static" I just mean there's some known location (eg: "google.com") that clients can always connect to.

If it's not obvious by now, a sharded architecture is significantly more complex than a single-server design. On top of the existing complexity, we still have a bottleneck in our master server, so we need to ensure that it does as little work as possible to prevent any large delays. Keep that in mind as we move forward.

Say our client has connected to Shard 1 and played for a bit, and now they want to switch to Shard 2 because their friend has just logged on. How can we ensure that their progress will carry over? What about when they log out and come back tomorrow?

The first solution that comes to mind is to create a persistent database with something like SQL, but copying an entire database to every shard server and keeping them all synced up every time a player switches shards would be a major waste of bandwidth. The next step up would be to make the master server, or a separate dedicated "database" server, responsible for holding and directly interacting with the database, with the master and each shard sending it commands. A step above that would be to shard our database as well, to prevent a high load from causing a bottleneck.

There are multiple ways to shard a database. We could completely copy the whole database between multiple servers and have a master server route requests and responses. This would allow us to distribute the load for read requests (eg: a SELECT query could be handled by any database server), but we'd still need to ensure all write requests are given to every database for data accuracy (eg: if an INSERT query is given to DB 1 but not DB 2, multiple SELECT queries could return different responses).

Let's say we have multiple tables within our database. This would allow us to move each table onto its own server, which would make updating data significantly lighter, since we don't need to ensure parity across identical servers (though we could and should still keep multiple copies of each database shard for data redundancy in the event of a crash or other failure). However, this comes with the downside that complex read requests (eg: any JOIN) would need the different database shards to share entire tables, which could result in incredibly slow queries. I would only recommend this if your specific implementation is either high-write, low-read or you can split the tables such that any cross-table communication can be done without further networking (eg: by copying commonly joined tables across multiple database shards). If you're going that route, you're probably a far better database engineer than I am, so good luck to you.

We've got our rough plan, but now we need to tell the master server where the shards and database(s) are. We could manually list them all in a configuration file, but that would be prone to mistakes and generally cumbersome. A better way would be to have the shards tell the master server where they are, since the master server is at a static location anyway. However, this opens us up to a new problem: what if a malicious third-party tries to mimic one of our shards? We can use a secure handshake to ensure only our shards are recognized by our master.

The simplest handshake design would be to manually generate an RSA keypair for every shard server and provide the public keys to the master server. Any time a shard tries to connect to the master, it encrypts some known word (eg: "Shard") with its private key and sends that to the master. If the master can decrypt the expected word from the received message using any of its held public keys, then we know that shard is one of ours. The upside to this is that it's simple, but the obvious downside is it prevents automation due to the need to manually copy the generated public key.

An alternative would be to go the other way: we generate one RSA keypair for the master server and provide the public key to all shards. However, if every shard sends the same encrypted message, a man in the middle could intercept that message and replay it to impersonate our shards without needing access to either of our keys. To solve this, we have the master server send an encrypted challenge to the shard, which replies with the encrypted solution. This challenge-response exchange (similar in spirit to a zero-knowledge proof) allows the shard to prove it has the public key (it can decrypt the challenge and encrypt the solution) without creating a reproducible result or revealing the public key (the challenge and solution should be different every time). Since holding that key is what proves identity here, the "public" key has to be kept as secret as a password. The challenge also doesn't need to be difficult, just unique on every connection. It could be as simple as "solve this algebra problem" or even "reply with the same message."

This same system can be performed for different types of connections (players can request a "player" handshake, database servers can request a "database" handshake, etc.) so long as each uses a unique public/private keypair.

There are two potential answers to this question:

In a word: everything. At least on the technical side - MMO gameplay design is a whole field of study which I don't yet feel confident enough to tackle.

This does mean the series will be ongoing for quite a while, but I want to be sure everything is covered in detail.

Not the answer you want to hear, but I'm not sure. It took me about 140 hours of development on my own project to get to a point where players can log into the game and chat - and that's without anything to *actually* do, so there's a lot to talk about.

I'll try to cover everything generally with lots of visual aids, but for specific implementation details I'll be using the Godot game engine which is totally free, cross-platform, and open-source.

Currently planned topics include the following, in no specific order:

This post (and all future posts tagged with "course") is intended to be educational material in the field of computer science. This differs from many video/text guides described as "programming" or "coding" tutorials, as it will focus on CS theory rather than any specific programming language's syntax. In fact, I'm currently planning to avoid programming languages until I finish the CS1XX series and begin CS2XX, so there will be little, if any, coding discussed until then (though pseudo-code will be used to efficiently get concepts across).

My goal in this is to offer functionally useful skills and knowledge to anyone who's interested enough to read about it. Courses focusing on programming, in my eyes, have a huge drawback: no one wants to hire a programmer who has little to no computer science background. Learning a programming language, even proficiently, without knowing computer science is effectively worthless; it'd be like learning a foreign language without ever talking to anyone else who understands it. It's like being given a magic calculator that can solve any problem you can write, but not knowing which equations are used in which context. Computer science can be picked up incidentally through the active use of programming languages, which is why I personally don't care if someone is self-taught or has a college degree so long as they have the practical knowledge and ability, a sentiment shared by many in the industry, but that doesn't mean anyone will wait for you to get to where they expect you to be.

I believe there is one aspect of computer science that sets it apart from most fields of study: because it sits somewhere between discovered sciences, like chemistry and physics, and invented sciences, like mathematics, anyone who knows the very basics of the field has the potential to derive everything else on their own. That idea will drive this course's design. The rest of this article will go into detail about the very basics of CS in a fairly straightforward and explanatory way, but the rest of the course will be written to encourage you to arrive at each idea on your own before reading it. As such, each section will contain exercises to practice concepts. I would highly recommend at least attempting each exercise; I took the time to write them out, so it's reasonable to assume I wouldn't waste my time or yours by inventing pointless work, but I'm not exactly in a position to require anything of anyone reading this. However, if you're still reading by this point, I think it's fair to assume you have enough interest in the topic that I can expect you to put some time into practicing the concepts. When you get to a bolded section, stop and try to reason your way through the questions being asked.

Be warned: this is intended as a college-level resource. This won't be written as a typical "intro to programming" video or series that walks you through basic concepts. You're expected to put effort into reasoning through ideas and concepts before or as they're introduced. That being said, if a section or concept seems too confusing, you are welcome to email me using the button at the bottom of the article.

With that intro out of the way, let's get on to the first concepts of computer science:

When talking about the invention of computer science as a field of study, most people will at least recognize the name Alan Turing, who we will get back to shortly. However, before Alan Turing, there was his academic advisor and mentor Alonzo Church who invented a form of mathematics called lambda calculus. The idea behind lambda calculus is that logic can be used to break down a mathematical expression or concept to make them easier to analyze and construct. For example:

Imagine a mathematical problem such as finding the volume of a cone where the formula is expressed as:

$$V_{cone}(r, h) = \frac{\pi \times r^2 \times h}{3}$$

In what ways is this formula confusing? Why would it be useful to know this? Is that knowledge reusable or transferrable to other problems?

Through lambda calculus we could try breaking this down into smaller functions as follows (understanding the exact syntax is not important):

$$\lambda r.(\pi \times r^2 \times \lambda h.(h / 3))$$

...or in a potentially more familiar syntax:

$$f_{cone}(r, h) = \pi \times r^2 \times g_{cone}(h)$$

$$g_{cone}(h) = \frac{h}{3}$$

Now we can easily recognize that the simpler function $f_{cone}(r, h)$ actually consists of the area of a circle followed by one extra step (multiplying by $g_{cone}(h)$).

What can that lead us to learn about $g_{cone}(h)$? It obviously relates to the cone's height in some way, but why divide by 3? Beyond why it's there, why is it useful to know these things?

To understand why $f(r, h)$ ends up multiplying by $g(h)$, we should look at one more, similar function: the volume of a cylinder:

$$V_{cylinder}(r, h) = \pi \times r^2 \times h$$

$$\lambda r.(\pi \times r^2 \times \lambda h.h)$$

...or in a potentially more familiar syntax:

$$f_{cylinder}(r, h) = \pi \times r^2 \times g_{cylinder}(h)$$

$$g_{cylinder}(h) = h$$

How do $V_{cylinder}(r, h)$ and $V_{cone}(r, h)$ relate?

The truth is that $V_{cone} = V_{cylinder} \times \frac{1}{3}$. This is a fairly mathematically simple example, but it illustrates the usefulness of lambda calculus: by breaking down complex problems into simpler problems, we can gain a better understanding of how different complex problems relate to each other.

Alright, back to Alan Turing. As stated before, Turing studied under Church, so it's no surprise that the two shared an interest in the analysis of computation. Their names are joined in the Church-Turing thesis, which holds that a Turing Machine (a theoretical device described by Turing) can compute anything that is computable.

What does it mean for something to be computable? What's an example of a question that is not computable? What are the requirements of a device for it to compute anything computable (what's the list of every possible action)?

Alan Turing came to a surprisingly terse list of actions that are required for something to be considered able to compute anything computable, also known as being "Turing complete". The machine must be able to:

Read its internal state and some external information (eg: input and intermediate/temporary variables).

Write its internal state and some external information (eg: output and intermediate/temporary variables).

Act differently depending on what it can read using a table of instructions.

This video by Ben Eater goes into the details of this requirement set, such as why its contents are what they are.

A Turing Machine's instructions are provided in a table. At every update, the Turing Machine searches that table for the line matching its current state and the value under its head, performs that line's actions, then starts over. It does this until it's told to stop (or "halt"). Here's an example of what a Turing Machine's instructions might sound like:

If the state is A and the head is at a 0, write a 1, set the state to B, and move the head left.

If the state is A and the head is at a 1, write a 2, set the state to B, and move the head right.

If the state is A and the head is at a 2, write a 0, set the state to C, and move the head left.

If the state is B and the head is at a 0, set the state to A, and move the head left.

...

If the state is C and the head is at a 2, halt.

What are some limitations of a Turing Machine? Can you think of a problem that a Turing Machine might not be able to compute? If so, why can't the Turing Machine compute the answer? Is the problem just unsolvable, or is the Turing Machine just too limited? How could we improve the Turing Machine?

The biggest differences between a Turing Machine in concept and modern computers are that:

A Turing Machine can only read one item of external information at a time - wherever its head is.

Since the head can only move one position at a time, moving between pieces of data can be a lengthy process.

In contrast, modern computers can read/write any available information via their address (this will be explained further later).

A Turing Machine reads through a table of instructions to determine what action should be taken for each step.

This can make each step fairly time consuming.

In contrast, modern computers are less context-sensitive. They follow a list of pre-determined instructions rather than looking up the next instruction based on their current state.

As Turing Machines are primarily a conceptual idea rather than a physical object, they can use an infinitely long tape for memory.

This provides an obvious advantage for computing complex problems.

In contrast, modern computers have limited memory, which needs to be kept in mind when designing algorithms for them. It's commonly said that you can optimize an algorithm either to be fast and use more memory, or to be slow and use less memory. This tradeoff is known as "speed vs size."

Most human cultures use a numerical system known as "base ten," or more specifically, "base ten positional notation." We call it "base ten" because there are ten unique digits (0, 1, 2, 3, 4, 5, 6, 7, 8, and 9), and the position of each digit in a number determines how much it contributes to the number's value (eg: 12 is different from 21): each digit is multiplied by the base raised to the power of its position, counting from 0 on the right. This contrasts with a system like tally marks, where the position of each mark does not provide additional information about the value being shown. For example:

$$
\begin{aligned}
123 &= 100 + 20 + 3 \\
    &= 1 \times 100 + 2 \times 10 + 3 \times 1 \\
    &= 1 \times 10^2 + 2 \times 10^1 + 3 \times 10^0
\end{aligned}
$$

This system is also commonly called "decimal."

However, this is not the only way to read, write, and work with numbers. It may seem like the most efficient or easiest method, but different systems are better for different situations. For example, some cultures use "base twelve," aka "duodecimal" or "dozenal" notation. Within the field of computer science, alongside decimal, we also commonly use three other numeric systems: binary (base two), octal (base eight), and hexadecimal, or "hex" (base sixteen).

How can we use these different numeric systems? Why might it be easier to do some things in a different base?

The first thing everyone learns with any numeric system is counting, so let's start there.

Coming from decimal, counting in octal is fairly simple: do the same thing as normal, but skip the digits "8" and "9". For example: #0, #1, #2, #3, #4, #5, #6, #7, #10. Note that #10 in octal is not 10 in decimal; we aren't suddenly skipping certain values. Recall how positional numbers are evaluated in decimal, and apply those steps to octal (I'll be mixing octal and decimal, so pay attention to which numbers have a "#"!):

$$
\begin{aligned}
\#14 &= \#10 + \#4 \\
     &= 1 \times \#10 + 4 \times \#1 \\
     &= 1 \times 8^1 + 4 \times 8^0 \\
     &= 12
\end{aligned}
$$

Try converting the following numbers from octal to decimal: #20, #32, #100, #236.
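If you'd like to check your answers, here is a small sketch that evaluates a numeral in any base from 2 to 16. The function name `toDecimal` is hypothetical, and it expects the bare digits without the "#", "0b", or "0x" prefixes used in this post. Instead of computing each power of the base separately, it multiplies the running total by the base before adding each new digit, which produces the same sum of digit × base^position shown above.

```cpp
#include <stdexcept>
#include <string>

// Evaluates a positional numeral in any base from 2 to 16 (digits 0-9, then A-F).
// Each step shifts the previous value up one position (multiply by the base) and
// adds the next digit, which equals the sum of digit * base^position.
unsigned long long toDecimal(const std::string& digits, unsigned base)
{
    unsigned long long value = 0;
    for (char c : digits)
    {
        unsigned digit;
        if (c >= '0' && c <= '9')
            digit = static_cast<unsigned>(c - '0');
        else if (c >= 'A' && c <= 'F')
            digit = static_cast<unsigned>(c - 'A' + 10);
        else if (c >= 'a' && c <= 'f')
            digit = static_cast<unsigned>(c - 'a' + 10);
        else
            throw std::invalid_argument("not a digit");

        if (digit >= base)
            throw std::invalid_argument("digit too large for this base"); // e.g. '8' in octal

        value = value * base + digit;
    }
    return value;
}
```

For example, `toDecimal("14", 8)` gives 12, matching the worked example. The standard library can do the same job with `std::stoull(digits, nullptr, base)` from `<string>`.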

Once you've got the hang of that, move on to binary. Just as octal was decimal without two of its digits, binary keeps only two digits: 0 and 1. For example: 0b0, 0b1, 0b10, 0b11, 0b100, 0b101, 0b110, 0b111, 0b1000. One nice thing about binary is that it's easy to count on your fingers (try it out if you need some practice). To convert between binary and decimal, use the same process as before:

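$$
\begin{aligned}
\mathtt{0b10100101} &= \mathtt{0b10000000} + \mathtt{0b100000} + \mathtt{0b100} + \mathtt{0b1} \\
                    &= 1 \times 2^7 + 1 \times 2^5 + 1 \times 2^2 + 1 \times 2^0 \\
                    &= 128 + 32 + 4 + 1 \\
                    &= 165
\end{aligned}
$$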

Try converting the following numbers from binary to decimal: 0b1000, 0b10101, 0b1000000, 0b11111111.

Try converting the following numbers from binary to octal: 0b100, 0b1000, 0b10111, 0b100100100.

Is there anything interesting about how different binary numbers equate to octal numbers? What shortcuts can be used to convert between those two systems?

Finally, let's look at counting in hex. This is the opposite of the previous two systems: rather than removing symbols, we need to add some extra ones. For example: 0x0, 0x1, 0x2, 0x3, 0x4, 0x5, 0x6, 0x7, 0x8, 0x9, 0xA, 0xB, 0xC, 0xD, 0xE, 0xF, 0x10. The benefit of using hex is that one nibble (4 binary digits) fits exactly into one hex digit (eg: 0b1111 = 0xF). Here's the conversion of a hexadecimal number to decimal:

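$$
\begin{aligned}
\mathtt{0x5A2F} &= \mathtt{0x5000} + \mathtt{0xA00} + \mathtt{0x20} + \mathtt{0xF} \\
                &= 5 \times \mathtt{0x1000} + 10 \times \mathtt{0x100} + 2 \times \mathtt{0x10} + 15 \times \mathtt{0x1} \\
                &= 5 \times 16^3 + 10 \times 16^2 + 2 \times 16^1 + 15 \times 16^0 \\
                &= 20480 + 2560 + 32 + 15 \\
                &= 23087
\end{aligned}
$$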

Try converting the following numbers from hex to decimal and binary: 0xA0, 0x32, 0x200, 0xFF.

Is there anything interesting about how different hex numbers equate to binary numbers? What shortcuts can be used to convert between those two systems?
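Because each nibble maps to exactly one hex digit, converting binary to hex never needs to pass through decimal. Here is a small sketch of that shortcut; the function name `binaryToHex` is hypothetical, and it assumes the input contains only the characters '0' and '1' with no "0b" prefix.

```cpp
#include <cstddef>
#include <string>

// Converts binary digits (no "0b" prefix) straight to hex digits (no "0x" prefix):
// pad on the left to a multiple of 4 bits, then translate each 4-bit group
// (nibble) into exactly one hex digit.
std::string binaryToHex(std::string bits)
{
    const char* k_hexDigits = "0123456789ABCDEF";

    while (bits.size() % 4 != 0)
        bits.insert(bits.begin(), '0'); // Leading zeros don't change the value

    std::string hex;
    for (std::size_t i = 0; i < bits.size(); i += 4)
    {
        int nibble = 0;
        for (std::size_t j = i; j < i + 4; ++j)
            nibble = nibble * 2 + (bits[j] - '0'); // Positional rule again, in base 2
        hex += k_hexDigits[nibble];
    }
    return hex;
}
```

For example, `binaryToHex("10100101")` splits the input into 1010 and 0101 and returns "A5". Going the other way is just as direct: write each hex digit out as its 4-bit pattern.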

Game Devember was intended to be a game development challenge similar to Inktober. Instead of drawing a new piece each day, participants would develop a game over the course of the month by adding one feature each day.

There were only a few participants in the event, but considering the event was announced so late and my social reach isn't very large, having any participation outside myself was all I could hope for. One example I'm aware of would be Vac-Attack by my friend, Brandon Stam; it's fairly impressive that anyone was able to finish their project, so check it out if you get the chance.

Personally, I learned a lot about my own development stamina and how to effectively estimate the scope of a project and its individual tasks. While I never got to finish my own entry, basically every task that I had determined would take a full day did take a full day, no longer and no shorter. If you look at the Trello board I made for myself, you'll notice that a majority of the cards are just to set up systems. Notable examples include:

I found myself running out of steam to work on my project fairly quickly. By the end of the first week, I realized the project was either:

By the end of the second week, I realized I didn't have the energy to finish the project. I initially decided to shrink the project to fit the allotted time, but even then I found myself working 4-6 hours on the project every day (and that was after working full 8 hour weekdays). I feel that while I could keep up the hard-core work ethic for a week or so, a full month is a bit too long to burn the candle at both ends.

I quickly realized that a big reason more art-focused challenges like Inktober work is that an artistic piece (be it music, digital art, physical art, etc.) has no explicitly required "end" state, so it can have a low time commitment as a result. If you're drawing one art piece every day, you determine when it's done, so if you need a day off you can do a quick sketch instead of a fully rendered work. If you're programming one feature every day, the feature needs to be functional (and mostly bug-free) to be done, meaning you can't cut corners or re-scope any individual day of work.

Another major difference that results from working on a single project over a long period as opposed to multiple disconnected projects is that work compounds. You need to finish your work each day, or else the rest of the month will be harder (if it's even still completable). This was another major demotivator for me, personally, since I had to force myself to keep working late into the night because if I didn't, I wouldn't have time to finish my project. If each day's work was disconnected, skipping one day to rest or just doing half of a day's work and calling it there wouldn't screw me over for the rest of the challenge.

The biggest takeaway I think future events like this could implement is that compounding work should be avoided at all costs. Some methods for doing that involve the following:

I probably won't be hosting another iteration of the event this year. For those interested, there are other similar events (especially in December due to the advent-calendar theme) and there's no reason anyone can't challenge themselves with the same ruleset I laid out last year whenever they want. Personally, I'd rather focus on other long-term time investments at the moment, so small game-jams won't be my focus for a while. That being said, I wouldn't have nearly the experience I do - both in game development and programming in general - without game-jams, so I will continue to highly encourage everyone to participate in game-jams if they can, especially if you haven't participated in one before.

First, I'll start by sharing an example code snippet:

// C++17

#include <cstddef>

#include <cstring>

#include <string_view>

using namespace std::string_view_literals; // Enables the "sv" suffix below

constexpr std::string_view k_password = "Hello"sv;

// Compares each byte one at a time

// Simpler code, but slow to test every byte

constexpr bool test_singles(std::string_view input)

{

if (input.size() != k_password.size())

return false; // Sizes must match for the input to match

// Loop over all bytes one at a time

for (const char *a = input.data(), *b = k_password.data(), *end = b + k_password.size();

b < end;

++a, ++b)

{

if (*a != *b)

return false; // One of the bytes didn't match

}

return true; // Didn't fail any tests

}

// Compares groups of 4/8 bytes while possible

// Longer code, but up to 8x faster

// Not constexpr: std::memcpy can't be used in a constant expression

bool test_groups(std::string_view input)

{

if (input.size() != k_password.size())

return false; // Sizes must match for the input to match

const char *a = input.data(), *b = k_password.data();

std::size_t remaining = k_password.size();

// Loop over groups of 4/8 bytes (32-bit/64-bit CPU)

for (; remaining >= sizeof(std::size_t); a += sizeof(std::size_t), b += sizeof(std::size_t), remaining -= sizeof(std::size_t))

{

// memcpy avoids misaligned reads and strict-aliasing problems;

// optimizing compilers turn each copy into a single register load

std::size_t wa, wb;

std::memcpy(&wa, a, sizeof(wa));

std::memcpy(&wb, b, sizeof(wb));

if (wa != wb)

return false; // A group of bytes didn't match

}

// Loop over all remaining bytes (fewer than sizeof(std::size_t)) one at a time

for (; remaining > 0; ++a, ++b, --remaining)

{

if (*a != *b)

return false; // One of the bytes didn't match

}

return true; // Didn't fail any tests

}

This is a fairly simple optimization in concept, but it might look a little complicated to those less experienced with C++. In short, comparing two strings (aka: any two contiguous arrays of bytes, not just ASCII strings) one byte at a time is unreasonably slow if the memory is larger than a few bytes. We can speed up the comparison by testing multiple bytes at a time; in fact, it's most efficient to use the size of our hardware registers (4 bytes for 32-bit CPUs, 8 bytes for 64-bit CPUs), which is why I'm using std::size_t instead of explicitly long or long long (a plain int would also work, but it's typically only 4 bytes even on 64-bit CPUs).

By comparing bytes in groups of 4 or 8 at a time, we've effectively sped up arbitrary memory comparisons by up to that factor as well. Technically, this can give you an even greater speed improvement than that, since we're skipping other instructions for each loop:

One caveat to keep in mind is that modern compiler optimization (especially with liberal use of constexpr and STL algorithms like std::find_if) will likely replace slow byte-for-byte for loops with this style of assembly anyway, at least when optimizations are enabled. Still, I feel understanding the optimization, both that it exists and how it can be applied by yourself and by your compiler, is important enough to write about.
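Following on from that caveat, here is a sketch of leaving the whole comparison to the standard library. The function names `test_library` and `test_equal` are hypothetical, and the password is passed in as a parameter so the snippet stands alone. Whether a particular compiler and standard library actually turn these into word-sized comparisons is an implementation detail, not a guarantee.

```cpp
#include <algorithm>
#include <string_view>

// Same job as test_singles/test_groups, left to the standard library.
// std::string_view's operator== checks the sizes first, then compares the
// characters; standard libraries typically forward that to an optimized
// memcmp-style routine.
bool test_library(std::string_view input, std::string_view password)
{
    return input == password;
}

// The same check spelled out with std::equal; the four-iterator overload
// (C++14) returns false when the two ranges have different lengths.
bool test_equal(std::string_view input, std::string_view password)
{
    return std::equal(input.begin(), input.end(), password.begin(), password.end());
}
```

The hand-written versions are still useful for understanding what the library is doing for you; in production code, the one-line comparison is usually the better default.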

Let's get it out of the way: here's the full header file. Note: this is intended to be used with Visual Studio, hence the MSVC-exclusive call to __debugbreak().

// Debug.h

#pragma once

// Code for debugging

// Only functions in Debug builds

#define STRINGIFY_INTERNAL(x) #x

#define STRINGIFY(x) STRINGIFY_INTERNAL(x)

#ifdef _DEBUG

// Functions as a breakpoint in Visual Studio

#define DEBUG_BREAK() __debugbreak()

// Will break execution if `value` does not evaluate to true

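// do/while(0) makes this a single statement, so it's safe inside an unbraced if/else

#define DEBUG_REQUIRE_TRUE(value) do { if (!bool(value)) DEBUG_BREAK(); } while (0)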

// Will break execution if `value` does not evaluate to false

#define DEBUG_REQUIRE_FALSE(value) do { if (bool(value)) DEBUG_BREAK(); } while (0)

// Used in the body of a function to mark that it is not implemented yet

#define DEBUG_NOT_YET_IMPLEMENTED() __pragma(message(__FILE__ "(" STRINGIFY(__LINE__) "): warning: " __FUNCTION__ " has not been implemented yet!")) DEBUG_BREAK()

#else // Not building with debugging macros

#define DEBUG_BREAK()

#define DEBUG_REQUIRE_TRUE(value)

#define DEBUG_REQUIRE_FALSE(value)

#define DEBUG_NOT_YET_IMPLEMENTED()

#endif

Putting DEBUG_REQUIRE_TRUE(a == b); and DEBUG_REQUIRE_FALSE(a == b); all over my projects means I can catch errors early, and because execution breaks right at the failing check, it's obvious exactly which test failed.
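One refinement worth knowing for statement-like macros such as these: a macro that expands to a bare `if` can silently capture an `else` written after it, while wrapping the body in `do { ... } while (0)` makes the macro expand to exactly one statement. The sketch below demonstrates the difference portably; `TEST_BREAK`, `g_failures`, and the `REQUIRE_TRUE_*` macros are hypothetical stand-ins that count failures instead of calling __debugbreak(). Many compilers will warn about the "dangling else" in the first function, which is exactly the hazard being shown.

```cpp
// Hypothetical stand-in for DEBUG_BREAK(): counts failed checks instead of
// stopping in the debugger, so this sketch runs on any compiler.
static int g_failures = 0;
#define TEST_BREAK() (++g_failures)

// A bare if statement, the same shape as an if-based DEBUG_REQUIRE_TRUE
#define REQUIRE_TRUE_BARE(value) if (!bool(value)) TEST_BREAK()

// Wrapped in do/while(0): expands to exactly one statement, so an else can't attach to it
#define REQUIRE_TRUE_SAFE(value) do { if (!bool(value)) TEST_BREAK(); } while (0)

// Both functions are *meant* to return 1 when outer is true and 2 otherwise.
int classify_bare(bool outer, bool check)
{
    if (outer)
        REQUIRE_TRUE_BARE(check);
    else
        return 2; // Actually binds to the macro's hidden if, not to `if (outer)`
    return 1;
}

int classify_safe(bool outer, bool check)
{
    if (outer)
        REQUIRE_TRUE_SAFE(check);
    else
        return 2; // Binds to `if (outer)`, as written
    return 1;
}
```

With the bare version, `classify_bare(true, true)` returns 2 and `classify_bare(false, true)` returns 1, the opposite of what the code appears to say; the wrapped version behaves as written.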

I can safely use the strategy of "call a function and implement it later" by putting DEBUG_NOT_YET_IMPLEMENTED(); into the empty function instead of leaving it empty. Whenever I compile, I get a message reminding me that it's still empty and at what file/line the function lives.

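Because it's all wrapped in #ifdef _DEBUG, all my tests get removed when I compile in Release mode, so I can add as many safety checks as I want knowing they won't slow down the final build. (The flip side is that anything with side effects inside a check also disappears in Release builds, so the checks should only test values.)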

In the end, this is a really simple/short file, but it's made my life debugging issues significantly easier since I started using it.

Artists draw a piece every day for October, so I'd like to propose game devs make a project and add one feature every day for December and release the result on New Year's.

Itch.io Jam Page: https://itch.io/jam/gamedevember-2020

Here's a list of features you could include in your game:

I've always been fascinated by the idea of user-generated content in games and tools. In a game I made called Miniplex (unfortunately now lost to time, but inspired by the popular Minecraft server "MinePlex"), I created a simple modding API for players to create and share their own characters and items. More recently, in my game Pocket World, I implemented a simple scripting system for dialogue with the idea that a player could create their own simple quests by modifying scripts.

\j\mFelia Town=true0013

Man: Yo wait up. (Press '\iAction')

\p: What?

Man: Figured I'd welcome you to Felia.

\p: Oh, sure. Thanks.

Man: Also, if you see anyone you want to talk to or

Man:signs you want to read, go ahead and press '\iAction'.

\p: ...How do you know what that means?

Man: ...

Man: I... I don't... know...

\wOff

\j0000=00000019

\e

Man: Hey! What do you think you're doing?!

\p: What do you mean?

Man: This world starts shifting and then you show up...

Man: Don't you act like you didn't change anything.

Man: I've got my eye on you...

\p: ...

\wOff

\fwoodplease.scene

Recently I was asked to help create a framework for a modular audio synthesis program. I felt this would benefit from a robust modding API for creating new modules, so instead of creating a purely data-based solution (eg: JSON or XML) I decided to implement full C++ support via the dynamic loading of Windows DLLs.

Unfortunately, the program is currently not open-source, so I can't share a majority of its code, but I can still share some more generic snippets. If that changes I will add a link to the repository here.

The first stage in implementing the API was creating multiple Visual Studio projects within one solution to make multi-stage compilation easier. I started by making three projects which build in the following order:

The two most important things to notice about that list are that:

While I won't be showing all of the code for this class, I'll still be going over what's important for the core modding API.

// Module_Base.h

class Module_Base;

typedef std::shared_ptr<Module_Base> (*ModuleGenerator)();

class FRAMEWORK_DLLMODE Module_Base

{

public:

template <class T>

static std::shared_ptr<Module_Base> Create()

{

std::shared_ptr<T> r = std::make_shared<T>();

r->Init(r);

return std::dynamic_pointer_cast<Module_Base>(r);

}

virtual ~Module_Base() = default;

virtual bool Update() = 0;

// ...

protected:

virtual void Init(std::shared_ptr<Module_Base> self)

{

m_pointer = self;

// ...

}

// ...

std::weak_ptr<Module_Base> m_pointer;

// ...

private:

// ...

};

A handful of notes on this base class:

FRAMEWORK_DLLMODE is __declspec(dllexport) when building the library and __declspec(dllimport) otherwise.
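A macro like this is usually driven by a preprocessor symbol that only the DLL's own project defines. The sketch below shows one common way to write it; `FRAMEWORK_EXPORTS` is an assumed name (set it in the framework project's preprocessor definitions), and the non-Windows branch is an addition so the header still compiles with other toolchains, where GCC and Clang make shared-library symbols visible by default.

```cpp
// Sketch of a framework export macro. FRAMEWORK_EXPORTS is an assumed symbol,
// defined only when compiling the framework DLL itself.
#if defined(_WIN32)
    #if defined(FRAMEWORK_EXPORTS)
        #define FRAMEWORK_DLLMODE __declspec(dllexport) // Building the DLL: export symbols
    #else
        #define FRAMEWORK_DLLMODE __declspec(dllimport) // Using the DLL: import symbols
    #endif
#else
    // Non-Windows fallback: no annotation needed with default symbol visibility
    #define FRAMEWORK_DLLMODE
#endif
```

Because the same header is included by both the framework and its users, each side automatically gets the annotation it needs without any extra edits.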

Module_Base::Create() will be used to construct any derived modules. This way we can make sure Module_Base::Init() gets called.

m_pointer is actually a std::weak_ptr referencing the owning module instance (aka: this). This is so modules can easily obtain a std::shared_ptr to themselves for functions that require one. This is purely because I heavily relied on smart pointers for the memory management of the program, so any functions that would normally take a raw pointer are made to take a smart pointer instead. Storing it as a weak_ptr is important because it allows us to create new shared_ptr copies without locking the reference count to a minimum of 1 (which would prevent the object from ever being destroyed).
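For comparison, the standard library has a built-in version of this pattern: `std::enable_shared_from_this`. It stores the same kind of weak reference that m_pointer holds, and `std::make_shared` fills it in automatically, so no separate Init() call is needed. This is a swapped-in technique, not what the framework uses; the class name `SelfAwareModule` is hypothetical.

```cpp
#include <memory>

// Alternative to a hand-rolled weak_ptr member: inheriting from
// std::enable_shared_from_this gives the class a hidden std::weak_ptr to itself,
// which std::make_shared / std::shared_ptr construction fills in automatically.
class SelfAwareModule : public std::enable_shared_from_this<SelfAwareModule>
{
public:
    virtual ~SelfAwareModule() = default;

    // New owning pointer to this module. Only valid once the object is already
    // owned by a std::shared_ptr (since C++17 it throws std::bad_weak_ptr otherwise).
    std::shared_ptr<SelfAwareModule> Self() { return shared_from_this(); }

    // Non-owning handle, like m_pointer: it never keeps the module alive by itself.
    std::weak_ptr<SelfAwareModule> WeakSelf() { return weak_from_this(); } // C++17
};
```

A derived module would get a pointer to its own type with `std::static_pointer_cast` or `std::dynamic_pointer_cast` on the result of Self().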

To avoid cluttering this explanation, I'll stick to only explaining the loading of external modules, meaning the following code won't actually do all that much on its own, but it will help get the idea across regardless.

First, I'll create a ModuleManager class to load and track each of the loaded DLLs:

// ModuleManager.h

class ModuleManager

{

public:

typedef std::vector<std::shared_ptr<Module_Base>> ModuleList;

typedef void (*RegistrationFunction)(ModuleManager* mm, void (*callback)(ModuleManager*, std::string path, ModuleGenerator));

void LoadAllDLLs();

static void Register(ModuleManager* mm, std::string path, ModuleGenerator generator)

{

// Cut down: just store the path/generator pair for later look-up

mm->menuItems.push_back(MenuItem(path, generator));

}

void Add(std::shared_ptr<Module_Base> newModule)

{

allModules.push_back(newModule);

}

bool Update()

{

for (auto& module : allModules)

if (!module->Update())

return false;

return true;

}

//...

private:

struct MenuItem

{

std::string path;

ModuleGenerator generator;

MenuItem(std::string path, ModuleGenerator generator) : path(path), generator(generator) {}

};

std::vector<MenuItem> menuItems;

std::vector<HINSTANCE> loadedDLLs;

ModuleList allModules;

//...

};

// Main.cpp

int main(int argc, char** argv)

{

ModuleManager mm;

mm.LoadAllDLLs();

while (mm.Update());

// ...

}

Now I'll explain the above code's design:

ModuleManager::ModuleList and ModuleManager::RegistrationFunction are just typedefs to make future code easier to read and write. I could have used std::function for ModuleManager::RegistrationFunction instead of a raw function pointer, but because I had not used std::function before when originally writing this class, I didn't feel comfortable experimenting with it at the time.

ModuleManager::LoadAllDLLs() will end up being called at the start of main. Ideally, this could be made into a more dynamic system that is constantly checking for new or modified DLLs to allow for hotloading code changes, but for my purposes loading all of the available DLLs at startup is sufficient.

ModuleManager::Update() simply runs Update() for each of the modules that exist, effectively returning module[0].Update() && module[1].Update() && ... && module[n - 1].Update()

ModuleManager::Register() shown above is significantly cut down from the actual implementation I used, but still highlights the intended functionality. Basically, I'm using it as a way to store a std::string, ModuleGenerator pair within my ModuleManager for future look-up.

ModuleManager::LoadAllDLLs:

// ModuleManager.cpp

#define NOMINMAX

#include <windows.h>

#include <shobjidl.h>

#include <iostream>

#include <string>

void ModuleManager::LoadAllDLLs()

{

WIN32_FIND_DATA FileFindData;

HANDLE hFind = FindFirstFile(TEXT("./modules/*.dll"), &FileFindData);

int count = 0;

HINSTANCE hinstDLL;

std::cout << "Loading module DLLs..." << std::endl;

if (hFind != INVALID_HANDLE_VALUE)

{

do {

std::wstring filenameW(FileFindData.cFileName);

filenameW = L"./modules/" + filenameW;

std::string filename(filenameW.begin(), filenameW.end());

std::cout << " Found file: " << filename << std::endl;

hinstDLL = LoadLibrary(filenameW.c_str());

if (hinstDLL != nullptr)

{

RegistrationFunction rf = (RegistrationFunction)GetProcAddress(hinstDLL, "RegisterModule");

if (rf)

{

std::cout << " Running registration function..." << std::endl;

rf(this, ModuleManager::Register);

loadedDLLs.push_back(hinstDLL);

++count;

std::cout << " Finished loading DLL!" << std::endl;

}

else

{

std::cerr << " Couldn't find RegisterModule function in DLL" << std::endl;

FreeLibrary(hinstDLL);

}

}

} while (FindNextFile(hFind, &FileFindData));

FindClose(hFind); // Done searching, so release the search handle

}

if (count == 0)

{

std::cerr << "Couldn't find any files!" << std::endl;

std::cerr << "Make sure to place module DLLs in ./modules" << std::endl;

return;

}

else

std::cout << "Loaded " << count << " modules from ./modules" << std::endl;

}

So let's break this down a bit. The Windows API actually gives us a pretty nice way of iterating through files that match a certain pattern with FindFirstFile(...) and FindNextFile(...), so I start by querying for all files in the ./modules/ directory ending in .dll, and if at least one is found (hFind != INVALID_HANDLE_VALUE), I continue iterating through the rest until I've read all of them (do {...} while(FindNextFile(...))).

Within that loop, I get the relative path of the DLL file and store it in filename/filenameW (Windows uses wide characters, so I have to convert them into a normal std::string to print them to the console). I then attempt to load the DLL at that path with LoadLibrary(...), and if it loads successfully, I request a pointer to a function named "RegisterModule". I'll get back to that function later, but for this implementation to work, each DLL needs to implement its own function with the signature void RegisterModule(ModuleManager* mm, void (*callback)(ModuleManager*, std::string path, ModuleGenerator)). If the DLL contains that function, it gets called with this (aka: the ModuleManager) as its first parameter and ModuleManager::Register as the callback parameter. Ideally, the call should also be wrapped in a try {...} catch(...) {...} block so any exceptions thrown by the module's code can be handled gracefully rather than crashing the program. That can't protect against a RegisterModule with the wrong signature, though: GetProcAddress only matches the name, and calling a function through a mismatched pointer type is undefined behavior rather than a C++ exception.

Once we've run the provided RegisterModule(...) function, the DLL's handle gets added to a list. This way, all of the loaded DLLs can be freed when the program shuts down (eg: in the ModuleManager's destructor). On the other hand, if no "RegisterModule" function was found in the DLL, we want to unload it immediately since there's no point in it taking up memory.

This program can now be compiled; we shouldn't need to modify it to add or modify any future modules. In reality, a way to create registered modules and add them to the ModuleManager::allModules list needs to be implemented, but I'll assume that already exists since it would be different for every use-case. In my case, I used the ModuleManager::menuItems list to create a drop-down menu bar with the Dear ImGui library.

This will be the main showcase of the modding API. First, we need to create the basic structure of our derived class:

// Module_Sine.h

extern "C" {

DLLMODE void RegisterModule(ModuleManager* mm, void (*callback)(ModuleManager*, std::string path, ModuleGenerator));

}

class DLLMODE Module_Sine : public Module_Base

{

public:

Module_Sine();

~Module_Sine() = default;

std::string MenuPath() const override;

bool Update() override;

// ...

protected:

// ...

friend Module_Base;

};

DLLMODE is simply defined as __declspec(dllexport) since we won't need to use the generated .lib file.

Module_Sine::MenuPath() should return a string unique to this module while Module_Sine::Update() will simply update this module every loop, returning false if the program should quit. In this example, it might make sense to compute the next value of a sine wave and store it somewhere to be used elsewhere.

Regardless, we'll finally need to implement the RegisterModule(...) function described earlier.

// Module_Sine.cpp

extern "C" {

void RegisterModule(ModuleManager* mm, void (*callback)(ModuleManager*, std::string path, ModuleGenerator))

{

Module_Sine module; // Temporary instance, only used to ask for its menu path

callback(mm, module.MenuPath(), Module_Base::Create<Module_Sine>);

}

}

It's important that this function is contained within an extern "C" {...} block, since without it the function name would be mangled by the compiler and wouldn't be found by our previous call to GetProcAddress(...). We also have to construct an instance of Module_Sine to call Module_Sine::MenuPath(), since it's not static (static member functions can't be virtual, so they can't be overridden). The path could be hard-coded or Module_Sine::MenuPath() could be made static, but I find this far more functional, as it allows MenuPath() to be called on future instantiations, even after they've been cast to Module_Base pointers.

To outline how this implementation works, I'll construct a timeline for function calls, treating the EXE and DLLs as multiple programs communicating. Time is on the vertical axis and starts at the top.

| ModularSynthProgram.exe | Module_Sine.dll |
|---|---|
| Calls ModuleManager::LoadAllDLLs() | Unloaded |
| Calls LoadLibrary("./modules/Module_Sine.dll") | Loaded |
| Calls GetProcAddress(...) | Provides internal address of RegisterModule |
| Calls address given for RegisterModule(...) | |
| Waiting | Runs RegisterModule(...) |
| Waiting | Calls callback function provided to RegisterModule(...) |
| Runs callback of ModuleManager::Register(...) | Waiting |
| Returns from ModuleManager::Register(...) | Waiting |
| Waiting | Returns from RegisterModule(...) |
| Adds loaded DLL handle to loadedDLLs | |
| Calls the registered callback Create<Module_Sine>() | |
| Waiting | Runs Create<Module_Sine>() |
| Waiting | Returns from Create<Module_Sine>() |
| Adds new module to allModules | |

As you can see, the whole system functions on a back-and-forth of callbacks being passed around so the EXE can call functions in the DLL and vice versa. This way, the already-compiled EXE doesn't need to be aware of what functions exist in any module DLL; it asks them to register their own functions in a list alongside names so they can be called later by the end user. In a game setting, this could be used to, say, add new items or crafting recipes by having the DLL register the recipe needed to call one of its functions. Once that function is registered, the EXE can call it whenever it's necessary.
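To see the same handshake without any Windows-specific loading, here is a single-file sketch. `Module`, `Manager`, `SineModule`, `CreateSine`, and the "Oscillators/Sine" path are simplified, hypothetical stand-ins for the classes in this post, and `RegisterModule` is called directly here instead of through the pointer GetProcAddress would return.

```cpp
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Simplified stand-ins for Module_Base and ModuleManager (hypothetical).
struct Module
{
    virtual ~Module() = default;
    virtual std::string MenuPath() const = 0;
};

using ModuleGenerator = std::shared_ptr<Module> (*)();

struct Manager
{
    std::vector<std::pair<std::string, ModuleGenerator>> menuItems; // path -> generator
    std::vector<std::shared_ptr<Module>> allModules;

    // Same role as ModuleManager::Register: the module calls this back with its path and generator
    static void Register(Manager* mm, std::string path, ModuleGenerator generator)
    {
        mm->menuItems.emplace_back(std::move(path), generator);
    }
};

// ---- In the real program, everything below would be compiled into the module DLL ----

struct SineModule : Module
{
    std::string MenuPath() const override { return "Oscillators/Sine"; }
};

std::shared_ptr<Module> CreateSine() { return std::make_shared<SineModule>(); }

// Stand-in for the exported RegisterModule found by LoadAllDLLs
void RegisterModule(Manager* mm, void (*callback)(Manager*, std::string, ModuleGenerator))
{
    callback(mm, SineModule{}.MenuPath(), CreateSine);
}
```

Calling `RegisterModule(&mm, Manager::Register)` fills `menuItems` with one (path, generator) pair; later, calling the stored generator creates the module, mirroring the last rows of the timeline above.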

So I learned something pretty fun about a week back: homosexuality was never actually mentioned in the Bible until the mid-20th century.

Honestly, this shocked me. I've been so used to bigoted Christians claiming "my religion prevents me from serving gay people" or similar claims that "Christianity requires heterosexuality and condemns deviation" that I simply assumed that it was a long-standing teaching for thousands of years stretching back to the religion's Judaic roots, but apparently it's a very recent (and likely intentional) mistranslation. That's the TL;DR, but some people might want evidence, citations, or just a story; for that, keep reading.

So how did this happen?

Well, as most people should know, English hasn't always been the global lingua franca most people are familiar with now (English more-or-less officially became the common international language during World War II, and modern English only fully developed a little after 1600 CE). Many might also assume that the Bible was originally written in Hebrew, considering it stems from the Jewish Torah, but keep in mind that the Roman Empire dominated the eastern hemisphere at the time: the Roman Empire existed between 27 BCE and 476 CE, while Jesus Christ was alive from 4-6 BCE to 30-36 CE. You might then assume that the lingua franca of the time was Latin, since that was the language most commonly spoken by ancient Romans, but that would be analogous to believing that Mandarin Chinese is the modern lingua franca because China has the largest national population, or that Russian is the lingua franca because Russia is the largest country by landmass. In fact, the lingua franca during the Roman Empire was actually ancient Greek (likely because ancient Greece was heavily involved in international trade, causing their language to spread, though this is personal speculation).

So the earliest popular forms of the Bible found and translated from are actually in Greek. How does this relate to the idea of homosexuality (or rather, the absence of it)? If you're familiar with the Lutheran denomination of Christianity, you'll know the story of its founder, Martin Luther, who decided to split off from the Catholic Church. For the unfamiliar, at the time most people were illiterate, meaning they couldn't actually read the Bible that was being preached to them (it didn't help that the copies of the book being circulated by the Catholic Church were written in Latin, which had long since become a dead language). Luther was fortunate enough to be educated well enough in Latin to read the scripture himself, and he found that many teachings being spread by Catholic clergy were either not present or greatly exaggerated (for example, the idea of being able to pay the church to absolve oneself of the guilt of sin). This relates to the modern mistranslation because the 20th-century retranslators writing copies of the book in English (such as the Revised Standard Version) made the decision to intentionally bend interpretations of the source text to fit their own worldviews, believing that the consuming population would take it on faith and not attempt to read the original source material. How many Christian Americans: a) know the source language(s) and the cultural context surrounding their original transcription; b) have reasonable suspicion that the religion they've been taught all their lives has been intentionally skewed; and c) have the conviction to obtain a copy of the Bible in its original versions and take the time to retranslate it?

So, finally, what's the mistranslation? Well, the passage I'm going to draw attention to is 1 Timothy 1:10. In the English New International Version, it reads:

"for the sexually immoral, for those practicing homosexuality, for slave traders and liars and perjurers--and for whatever else is contrary to the sound doctrine"

So where did "homosexuality" in that verse come from? Well, taking a look at Martin Luther's translation from Latin to German, he used the word "Knabenschänder." The original Greek uses the word "arsenokoitai," which has the closest Latin translation of "paedico." So the order of translations of this word is "arsenokoitai" (Greek, by St. Paul some time between 40 CE and 67 CE) to "paedico" (Latin, translated some time in the 4th century) to "Knabenschänder" (German, translated by Martin Luther in 1534) to "homosexual" (English, Revised Standard Version, translated in 1946). I haven't given translations for the previous source words yet, but bilingual readers might have caught on already: "arsenokoitai" is a bit too early to have a concrete translation, but its closest Latin equivalent, "paedico," means "pederasty" (literally "sexual activity involving a man and a boy"). "Knabenschänder" translates directly as "boy molesters."

That's right, in the mid 20th century, international English versions of the Bible subtly switched out "pedophile" with "homosexual."

So what're the takeaways? First, if you (or someone you know) believed up until this point that "the Bible says gay people go to hell," it doesn't and this is the time for you to reevaluate your position on the topic. Second, any time someone uses "I've read the Bible" or suggests you do the same as a dismissal of a claim, ask them which version they read. Finally, it can now confidently be said that anyone who chooses to continue to make any negative claims against members of the LGBT community citing Christian backing after having this explained is simply a bigot who doesn't feel comfortable admitting it.

Happy pride month, everyone.

This file is to showcase the use of the relatively new constexpr keyword to allow for processing of C-style strings at compile time.

In turn, this allows C-style strings to be used as cases in a switch statement. This is normally impossible: case labels must be integral constant expressions, and a C-style string is a pointer (const char*), so no string comparison is possible there. Hashing each string into an integer at compile time sidesteps this.

This is useful in, for example, an assembler or other simple language interpreter, where it can improve lookup time from O(n) in an if {} else if {} chain to O(log(n)), since the compiler can turn a switch into a binary search or jump table.

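Another benefit over using enums is the lowered reliance on consistency across multiple files. With this solution, enum definitions don't need to be created or updated to reflect their use in functions.

A tradeoff, however, is the lack of transparency. This can be positive or negative depending on the circumstance; an open-source project might require more descriptive documentation to be available, but closed-source distributables could keep more of their inner workings private. Hashes can also collide: an input that isn't "Hello" but happens to share its hash would still match that case, so an interpreter may want to confirm a match with a real string comparison. (Two case labels with the same hash, on the other hand, are caught at compile time as duplicate cases.)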

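```cpp
#include <iostream>
#include <string>

// Alias for char (unrelated to C++17's std::byte).
typedef char byte;

// sdbm hash. Because it's constexpr, calling it with a string literal
// produces a compile-time constant. Needs C++14 or later for the loop.
constexpr unsigned long long hashString(const byte* str)
{
    unsigned long long r = 1435958502956573194; // custom starting seed

    // sdbm step: r = c + (r << 6) + (r << 16) - r (unsigned, so it wraps)
    while (*str)
        r = *str++ + (r << 6) + (r << 16) - r;

    return r;
}

// Runtime overload for std::string; forwards to the constexpr version so
// the runtime hash always matches the compile-time case labels.
unsigned long long hashString(const std::string& str)
{
    return hashString(str.c_str());
}

int main()
{
    std::string input;
    std::cout << "Input string: ";
    std::cin >> input;

    std::cout << "You gave me: " << input << std::endl;
    std::cout << "Hash: " << hashString(input) << std::endl;

    // Each case label is an integer computed by the compiler.
    switch (hashString(input))
    {
    case hashString("Hello"):
        std::cout << "Well, hi!" << std::endl;
        break;
    case hashString("Bye"):
        std::cout << "Oh, ok..." << std::endl;
        break;
    default:
        // Any other input: no reply.
        break;
    }

    return 0;
}
```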
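One more tradeoff worth knowing about is hash collisions: two different strings can produce the same 64-bit hash. If two case labels collide, the compiler rejects the duplicate case values, so that side is safe, but an arbitrary input that happens to collide with "Hello" would silently take the "Hello" branch. A minimal sketch of a guard, assuming C++14 and using hypothetical helpers named strEqual and reply, is to treat the hash as a first-pass filter and confirm the match with a real string comparison inside each case:

```cpp
// Same sdbm-style hash and seed as the post's hashString.
constexpr unsigned long long hashString(const char* str)
{
    unsigned long long r = 1435958502956573194;
    while (*str)
        r = *str++ + (r << 6) + (r << 16) - r;
    return r;
}

// Hypothetical helper: character-by-character equality usable in constexpr code.
constexpr bool strEqual(const char* a, const char* b)
{
    while (*a && *a == *b)
    {
        ++a;
        ++b;
    }
    return *a == *b;
}

// Hypothetical helper: returns the reply for a known word, or nullptr otherwise.
// The hash picks the candidate case and strEqual confirms it, so a colliding
// input falls through to nullptr instead of being mistaken for "Hello".
constexpr const char* reply(const char* word)
{
    switch (hashString(word))
    {
    case hashString("Hello"):
        if (strEqual(word, "Hello"))
            return "Well, hi!";
        break;
    case hashString("Bye"):
        if (strEqual(word, "Bye"))
            return "Oh, ok...";
        break;
    }
    return nullptr;
}
```

With a runtime std::string, reply(input.c_str()) could stand in for the bare switch in main(). The extra comparison only runs after the hash has already matched, so unrecognized input still costs a single hash and a switch.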

Recently, a bug was discovered that allows non-Nitro users to use emotes across servers by abusing colons (:) and graves (`). The most common method is as follows:

:`:emote:`:

While trying to reverse engineer the bug, I also found other formats that work but are less stable, such as:

:`::emote:`:

(Note the missing : after emote.)

For example, the pikcry emote from the Pikmin community Discord can be printed with

:`::pikcry::`:

to achieve this effect. (This is an old post from before I was hosting my own images, so unfortunately the images have been lost to time.)

Simply put, the bug is due to a misinterpretation between emote syntax and code syntax. Both Reddit and Discord use the standard markdown format for code, which uses graves (`). This syntax is meant to allow text to be sent without being interpreted, so you can describe things like `**bolding**` (**bolding**) or share general code that is typically hard to share with markdown.

`code`

Discord also allows for emotes with the :emotename: syntax, and an interesting quirk of how this syntax is interpreted is that if the requested emote does not exist, the text isn't replaced.

This is where the problem lies. A grave surrounded by colons is considered an emote (note that it is impossible to name an emoji ` as it will be replaced with __), so those graves aren't interpreted properly. When you surround an actual emote with these "emoted" graves, the initial interpretation considers it surrounded by code and skips checking whether the user has permission to use it, while the emote interpretation sees that the surrounding graves have been canceled out (as they're emotes, not normal text) and replaces the inner emote as though the user has permission to use it.

The bug can also be seen with emoji named __. Normally, __ underlines text (although it works differently on Reddit), meaning an emoji named __ should underline text if it is closed anywhere else in the message. However, this doesn't happen, in order to avoid incorrectly displaying text in messages. That handling created the oversight that allows this non-Nitro emote sharing to occur. Let's use the following example to break everything down:

```
:`::emote::`:
\_/
```

Interpreted as an emote, but that emote doesn't exist, so it's left unchanged.

```
:`::emote::`:
 \_________/
```

Interpreted as `::emote::`, meaning nothing's supposed to be changed, as it's code.

```
:`::emote::`:
          \_/
```

Interpreted as an emote, but that emote doesn't exist, so it's left unchanged.

```
:`::emote::`:
   \_____/
```

Interpreted as :emote:, meaning it should be replaced with <:emote:EMOTEID>.

```
:`:<:emote:EMOTEID>:`:
   \______________/
```

This is then drawn by the client.

I'm actually surprised this bug was only discovered recently, although it's understandable how it could make it through testing. An easy fix would be making it impossible for graves to be interpreted as emotes (as it's impossible to have an emote with a grave in its name anyway).
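Here's a minimal sketch of that fix, written as a hypothetical isValidEmoteName check that a parser could run on the text between two colons before treating it as an emote. The 2 to 32 character limit and the letters/digits/underscore rule are my assumptions about Discord's naming rules, not anything taken from Discord's code; the important part is that a grave can never pass, so :`: would never be treated as an emote.

```cpp
#include <cstddef>

// Hypothetical check a parser could run on the text between two colons.
// ASSUMPTION: names are 2-32 characters of ASCII letters, digits, or underscores.
constexpr bool isValidEmoteName(const char* name)
{
    std::size_t length = 0;
    for (; name[length] != '\0'; ++length)
    {
        const char c = name[length];
        const bool allowed = (c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z') ||
                             (c >= '0' && c <= '9') || c == '_';
        if (!allowed)
            return false; // a grave (`) or any other symbol rules it out
    }
    return length >= 2 && length <= 32;
}
```

Under the interpretation described above, rejecting :`: means the graves in :`::emote::`: go back to being ordinary code delimiters, so the inner emote would be left as code instead of being replaced.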

Quick legal note, just to be safe: this post does not violate rule 8. Discord's ToS states under "RULES OF CONDUCT AND USAGE" that:

you agree not to use the Service in order to:...

...exploit, distribute or publicly inform other members of any game error, miscue or bug which gives an unintended advantage...or promote or encourage...hacking, cracking or distribution of counterfeit software, or cheats or hacks for the Service.

I would like to be clear that this information is for educational purposes only and I am in no way encouraging the use or exploitation of this bug.
